Introduction

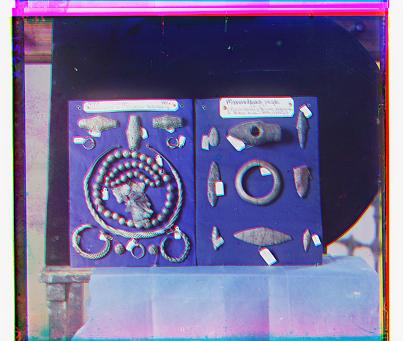

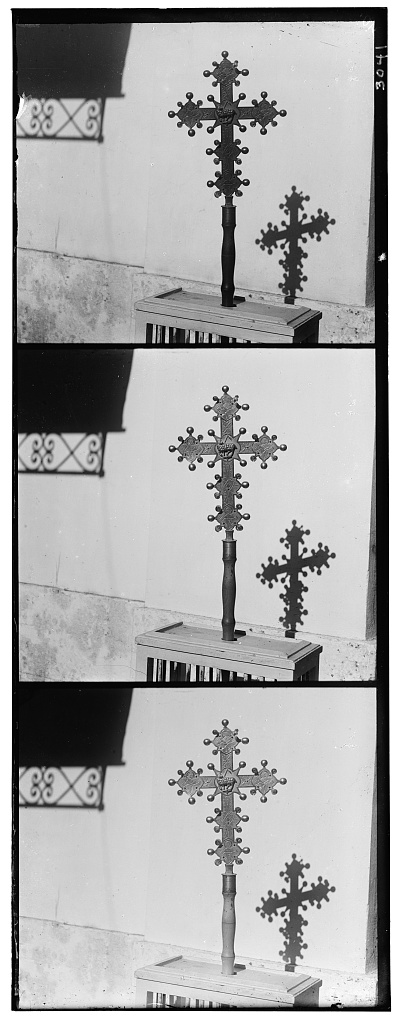

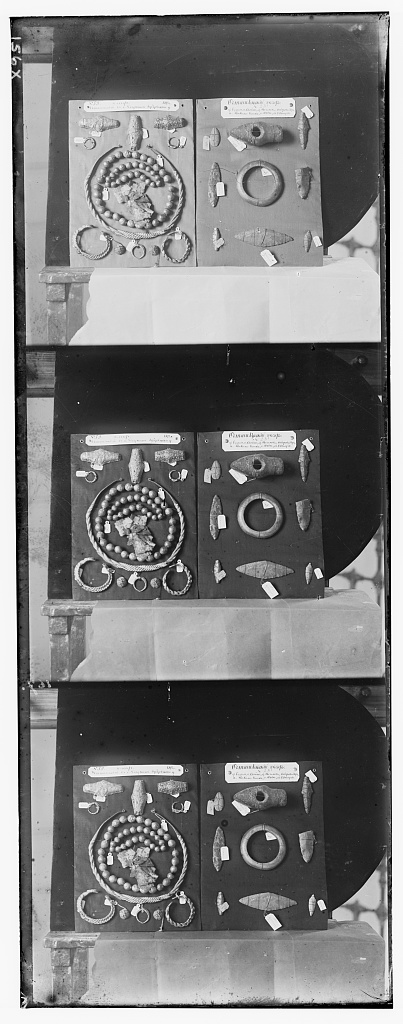

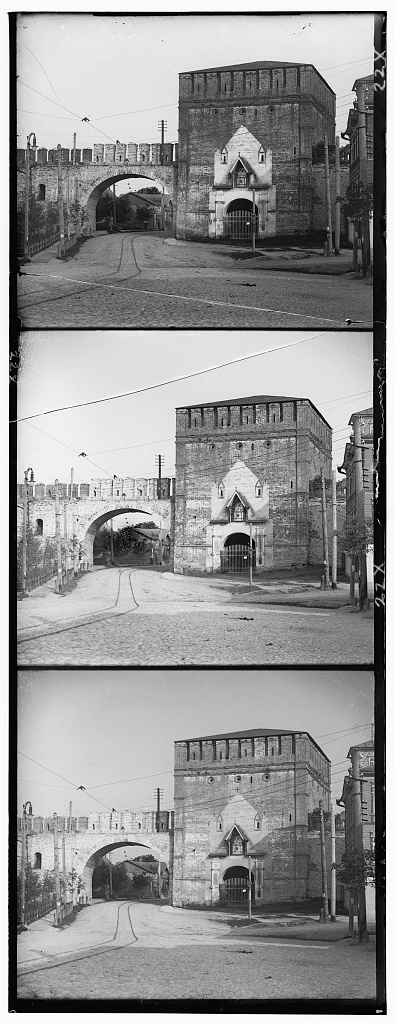

This project takes the digitized Prokudin-Gorskii glass plate images and, using image processing techniques, automatically produces a color image with as few visual artifacts as possible. To do this, I extracted the three color channel images from each plate, stacked them on top of each other, and aligned them so that they formed a single RGB color image.
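The extract, stack, and align steps can be sketched roughly as follows. This is a minimal illustration rather than the exact method used in this project: it assumes the plate is a single grayscale array with the three exposures stacked vertically in blue, green, red order from top to bottom, and it aligns by exhaustively searching a small window of shifts for the lowest sum of squared differences. The function names (`split_plate`, `best_shift`, `colorize`) and the `radius` parameter are hypothetical.

```python
import numpy as np


def split_plate(plate):
    """Cut a vertically stacked plate into three equal-height channels."""
    h = plate.shape[0] // 3
    # Assumed order from top to bottom: blue, green, red.
    return plate[:h], plate[h:2 * h], plate[2 * h:3 * h]


def best_shift(channel, reference, radius=15):
    """Return the (dy, dx) shift in [-radius, radius] that minimises the SSD."""
    best, best_score = (0, 0), np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # np.roll wraps pixels around the border, which is a simplification.
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = np.sum((shifted - reference) ** 2)
            if score < best_score:
                best, best_score = (dy, dx), score
    return best


def colorize(plate, radius=15):
    """Align green and red to blue, then stack the channels as an RGB image."""
    b, g, r = (c.astype(np.float64) for c in split_plate(plate))
    g_aligned = np.roll(g, best_shift(g, b, radius), axis=(0, 1))
    r_aligned = np.roll(r, best_shift(r, b, radius), axis=(0, 1))
    return np.dstack([r_aligned, g_aligned, b])
```

Calling `colorize(plate)` on a plate of height `3h` and width `w` returns an array of shape `(h, w, 3)`. An exhaustive search like this is only practical for small images and small displacements. Cropping the plate borders before scoring would likely reduce the influence of the wrapped-around pixels.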